From Wikipedia, the free

encyclopedia

"Bio-energy" redirects here. For the term

bio-energy in the context of non-mechanist

philosophy or alternative medicine, see

Vitalism.

Biofuel can be broadly defined as solid,

liquid, or gas

fuel derived from recently dead

biological material. This distinguishes it from

fossil fuels, which are

derived from long dead biological material.

Biofuel can be theoretically produced from any (biological)

carbon source, though the most common by far is

photosynthetic

plants. Many different plants and plant-derived

materials are used for biofuel manufacture. Biofuels

are used globally, most commonly to power

vehicles and cooking stoves. Biofuel industries

are expanding in Europe, Asia and the Americas.

Biofuels offer the possibility of producing

energy without a net increase of carbon into the

atmosphere because the plants used to produce the

fuel have

removed CO2 from the atmosphere,

unlike fossil fuels which return carbon which was

stored beneath the surface for millions of years

into the air. Biofuel is therefore more nearly

carbon neutral and less likely to increase

atmospheric concentrations of

greenhouse gases (though doubts have been raised

as to whether this benefit can be achieved in

practice,

see below). The use of biofuels also reduces

dependence on petroleum and enhances

energy security.

There are two common strategies of producing

biofuels. One is to grow crops high in either sugar

(sugar

cane,

sugar beet, and

sweet sorghum) or

starch (corn/maize),

and then use

yeast fermentation to produce ethyl alcohol (ethanol).

The second is to grow plants that contain high

amounts of vegetable oil, such as

oil palm,

soybean,

algae, or

jatropha. When these oils are heated, their

viscosity is reduced, and they can be burned

directly in a

diesel engine, or the oils can be chemically

processed to produce fuels such as

biodiesel. Wood and its byproducts can also be

converted into biofuels such as

woodgas,

methanol or

ethanol fuel. It is also possible to make

cellulosic ethanol from non-edible plant parts,

but this can be difficult to accomplish

economically.

Biofuels are discussed as having significant

roles in a variety of international issues,

including: mitigation of

carbon emissions levels and

oil prices, the "food

vs fuel" debate,

deforestation and

soil erosion, impact on

water resources, and energy balance and

efficiency.

History and policy

Humans have used biomass fuels in the form of

solid biofuels for heating and cooking since the

discovery of fire. Following the discovery of

electricity, it became possible to use biofuels to

generate electrical power as well. However, the

discovery and use of

fossil fuels:

coal,

gas and

oil, have dramatically reduced the amount of

biomass fuel used in the developed world for

transport, heat and power. [National

Geographic, Green Dreams, Oct 2007]

However, when large

supplies of

crude oil were discovered in

Pennsylvania and

Texas,

petroleum based fuels became inexpensive, and

soon were widely used. Cars and trucks began using

fuels derived from

mineral oil/petroleum:

gasoline/petrol

or

diesel.

Nevertheless, before

World War II, and during the high demand wartime

period, biofuels were valued as a strategic

alternative to imported oil. Wartime Germany

experienced extreme oil shortages, and many energy

innovations resulted. This includes the powering of

some of its vehicles using a blend of gasoline with

alcohol fermented from potatoes, called Monopolin.[citation

needed] In Britain,

grain alcohol was blended with

petrol by the

Distillers Company Limited under the name

Discol, and marketed through

Esso's affiliate Cleveland.[citation

needed]

During the peacetime post-war period,

inexpensive oil from the

Middle East contributed in part to the lessened

economic and geopolitical interest in biofuels. Then

in 1973 and 1979, geopolitical conflict in the

Middle East caused

OPEC to cut exports, and non-OPEC nations

experienced a very large decrease in their oil

supply. This "energy

crisis" resulted in severe shortages, and a

sharp increase in the prices of high

demand oil-based products, notably

petrol/gasoline.

There was also increased interest from governments

and academics in energy issues and biofuels.

Throughout history, the fluctuations of

supply and demand,

energy policy,

military conflict, and the environmental

impacts, have all contributed to a highly complex

and volatile market for energy and fuel.

Since 2000, there has been renewed interest

in biofuels. The drivers for biofuel

research and development include rising oil

prices, concerns over the potential

oil peak,

greenhouse gas emissions (causing

global warming and

climate change), rural development interests,

and instability in the Middle East.

Biomass

Biomass is material derived from recently

living

organisms. This includes plants, animals and

their by-products. For example, manure, garden waste

and crop residues are all sources of biomass. It is

a

renewable energy source based on the

carbon cycle, unlike other

natural resources such as

petroleum,

coal, and

nuclear fuels.

Animal waste is a persistent and unavoidable

pollutant produced primarily by the animals housed

in industrial sized farms. Researchers from

Washington University have figured out a way to turn

manure into

biomass. In April 2008 with the help of imaging

technology they noticed that vigorous mixing helps

microorganisms turn farm waste into alternative

energy, providing farmers with a simple way to treat

their waste and convert it into energy.

Agricultural products specifically grown for

biofuel production include

corn,

switchgrass, and

soybeans, primarily in the United States;

rapeseed,

wheat and

sugar beet primarily in Europe;

sugar cane in Brazil;

palm oil and

miscanthus in South-East Asia;

sorghum and

cassava in China; and

jatropha in India.

Hemp has also been proven to work as a biofuel.

Biodegradable outputs from industry,

agriculture, forestry and households can be used for

biofuel production, either using

anaerobic digestion to produce

biogas, or using

second generation biofuels; examples include

straw, timber, manure, rice husks, sewage, and food

waste. The use of biomass fuels can therefore

contribute to waste management as well as fuel

security and help to prevent climate change, though

alone they are not a comprehensive solution to these

problems.

Bioenergy from waste

Using waste biomass to produce energy can

reduce the use of fossil fuels, reduce greenhouse

gas emissions and reduce pollution and waste

management problems. A recent publication by the

European Union highlighted the potential for

waste-derived bioenergy to contribute to the

reduction of global warming. The report concluded

that 19 million tons of oil equivalent is available

from biomass by 2020, 46% from bio-wastes: municipal

solid waste (MSW), agricultural residues, farm waste

and other biodegradable waste streams.

Landfill sites generate gases as the waste

buried in them undergoes

anaerobic digestion. These gases are known

collectively as

landfill gas (LFG). This can be burned and is

considered a source of renewable energy, even though

landfill disposal is often unsustainable.

Landfill gas can be burned either directly for heat

or to generate

electricity for public consumption. Landfill gas

contains approximately 50% methane, the same gas

that is found in

natural gas.

Biomass can come from waste plant material. If

landfill gas is not harvested, it escapes into the

atmosphere: this is not desirable because methane is

a greenhouse gas, with more

global warming potential than carbon dioxide.

Over a time span of 100 years, methane has a global

warming potential of 23 relative to CO2.

Therefore, during this time, one ton of methane

produces the same greenhouse gas (GHG) effect as 23

tons of CO2.[citation

needed] When methane burns, the

reaction is CH4 + 2 O2 → CO2 + 2 H2O.

One ton of methane burns to about 2.75 tons of CO2, so harvesting and burning

landfill gas reduces its global warming potential

by a factor of roughly 8 on a mass basis, in addition to providing

energy for

heat and power.
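
The comparison above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it takes the 100-year global warming potential of 23 from the text, uses standard molar masses, and the function names are hypothetical. Because one ton of methane burns to roughly 2.7 tons of CO2, the mass-based reduction it computes is closer to 8 than to 23.

```python
# Illustrative arithmetic only. The 100-year GWP of 23 is the figure quoted
# in the text; the molar masses are standard values.
M_CH4 = 16.04  # g/mol, methane
M_CO2 = 44.01  # g/mol, carbon dioxide
GWP_CH4_100YR = 23.0


def co2_from_burning(tons_ch4: float) -> float:
    """Tons of CO2 formed when methane burns (CH4 + 2 O2 -> CO2 + 2 H2O).

    One mole of CH4 yields one mole of CO2, so masses scale by M_CO2 / M_CH4.
    """
    return tons_ch4 * M_CO2 / M_CH4


def co2e_if_vented(tons_ch4: float, gwp: float = GWP_CH4_100YR) -> float:
    """CO2-equivalent tons if the methane escapes unburned."""
    return tons_ch4 * gwp


def reduction_factor(gwp: float = GWP_CH4_100YR) -> float:
    """How many times smaller the warming effect is after combustion."""
    return co2e_if_vented(1.0, gwp) / co2_from_burning(1.0)


if __name__ == "__main__":
    print(f"1 t CH4 burned -> {co2_from_burning(1.0):.2f} t CO2")
    print(f"1 t CH4 vented -> {co2e_if_vented(1.0):.0f} t CO2e")
    print(f"reduction factor: {reduction_factor():.1f}")
```

The energy recovered from combustion is a separate benefit and is not modeled here.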

Frank Keppler and Thomas Röckmann discovered

that living plants also produce methane (CH4).

The amount of methane produced by living plants is

10 to 100 times greater than that produced by dead

plants (in an aerobic environment) but does not

increase global warming because of the

carbon cycle.

Anaerobic digestion can be used as a distinct

waste management strategy to reduce the amount of

waste sent to landfill and generate methane, or

biogas. Any form of biomass can be used in

anaerobic digestion and will break down to

produce

methane, which can be harvested and burned to

generate heat or power, or to fuel certain

vehicles.

A 3 MW landfill power plant would power 1,900

homes. It would keep 6,000 tons per year of

methane from escaping into the environment

and avoid 18,000 tons per year of CO2

through fossil fuel replacement. This is

the same as removing 25,000 cars from the road,

or planting 36,000 acres (146 km²) of forest,

or not using 305,000 barrels (48,500 m³) of oil per

year.

Liquid fuels for transportation

Most transportation fuels are liquids, because

vehicles usually require high

energy density, as occurs in

liquids and

solids. Vehicles usually need high

power density as can be provided most

inexpensively by an

internal combustion engine. These engines

require clean burning fuels, in order to keep the

engine clean and minimize

air pollution. The fuels that are easier to burn

cleanly are typically liquids and

gases. Thus liquids (and gases that can be

stored in liquid form) meet the requirements of

being both portable and clean burning. Also, liquids

and gases can be

pumped, which means handling is easily

mechanized, and thus less laborious.

Types of biofuels

First generation biofuels

'First-generation biofuels' refer to biofuels

made from

sugar,

starch,

vegetable oil, or

animal fats using conventional technology.

The basic feedstocks for the production of

first generation biofuels are often seeds or grains

such as wheat, which yields starch that is fermented

into bioethanol, or sunflower seeds, which are

pressed to yield vegetable oil that can be used in

biodiesel. These feedstocks could also enter the

animal or human food chain, and as the global

population has risen their use in producing biofuels

has been criticised for diverting food away from the

human food chain, leading to food shortages and

price rises.

The most common first generation biofuels are

listed below.

Vegetable oil

Edible vegetable oil is generally not used as

fuel, but lower quality oil can be used for this

purpose. Used vegetable oil is increasingly being

processed into biodiesel, or (less frequently)

cleaned of water and particulates and used as a

fuel. To ensure that the fuel injectors atomize the

fuel in the correct pattern for efficient

combustion, vegetable oil fuel must be heated to

reduce its viscosity to that of diesel, either by

electric coils or heat exchangers. This is easier in

warm or temperate climates.

MAN B&W Diesel,

Wärtsilä and

Deutz AG offer engines that are compatible with

straight vegetable oil, without the need for after

market modifications. Vegetable oil can also be used

in many older diesel engines that do not use common

rail or unit injection electronic diesel injection

systems. Due to the design of the combustion

chambers in

indirect injection engines, these are the best

engines for use with vegetable oil. This system

allows the relatively larger oil molecules more time

to burn. However, a number of drivers have

successfully experimented with earlier pre-"Pumpe

Düse" VW TDI engines and other similar engines with

direct injection.

Biodiesel

Biodiesel is the most common biofuel in

Europe. It is produced from

oils or fats using

transesterification and is a liquid similar in

composition to fossil/mineral diesel. Its chemical

name is fatty acid methyl (or ethyl) ester (FAME).

Oils are mixed with sodium hydroxide and methanol

(or ethanol) and the chemical reaction produces

biodiesel (FAME) and

glycerol. One part glycerol is produced for

every 10 parts biodiesel. Feedstocks for biodiesel

include animal fats, vegetable oils,

soy,

rapeseed,

jatropha,

mahua,

mustard,

flax,

sunflower,

palm oil,

hemp,

field pennycress, and

algae. Pure biodiesel (B100) is by far the

lowest emission diesel fuel. Although

liquefied petroleum gas and hydrogen have

cleaner combustion, they are used to fuel much less

efficient petrol engines and are not as widely

available.

Biodiesel can be used in any

diesel engine when mixed with mineral diesel.

The majority of vehicle manufacturers limit their

recommendations to 15% biodiesel blended with

mineral diesel. In some countries manufacturers

cover their diesel engines under warranty for B100

use, although Volkswagen of Germany, for example,

asks drivers to make a telephone check with the VW

environmental services department before switching

to B100. B100 may become more viscous at lower

temperatures, depending on the feedstock used,

requiring vehicles to have fuel line heaters. In

most cases biodiesel is compatible with diesel

engines from 1994 onwards, which use 'Viton' (by

DuPont) synthetic rubber in their mechanical

injection systems. Electronically controlled 'common

rail' and 'Pumpe Düse' type systems from the late 1990s

onwards (whose finely metered and atomized

multi-stage injection systems are very sensitive to

the viscosity of the fuel), may only use biodiesel

blended with conventional diesel fuel. Many of the

current generation of diesel engines are made so

that they can run on B100 without altering the

engine itself, although this can be dependent on the

fuel rail design.

Since biodiesel is an effective solvent and

cleans residues deposited by mineral diesel, engine

filters may need to be replaced more often as the

biofuel dissolves old deposits in the fuel tank and

pipes. It also effectively cleans the engine

combustion chamber of carbon deposits, helping to

maintain efficiency. In many European countries, a

5% biodiesel blend is widely used and is available

at thousands of gas stations. Biodiesel is also an

oxygenated fuel, meaning that it contains a

reduced amount of carbon and higher hydrogen and

oxygen content than fossil diesel. This improves the

combustion of fossil diesel and reduces the

particulate emissions from un-burnt carbon.

In the USA, more than 80% of commercial trucks

and city buses run on diesel. Therefore there is an

emerging U.S. biodiesel market, estimated to have

grown 200 percent from 2004 to 2005. "By the end of

2006 biodiesel production was estimated to increase

fourfold [from 2004] to more than 1 billion

gallons."

Bioalcohols

Biologically produced

alcohols, most commonly

ethanol, and less commonly

propanol and

butanol, are produced by the action of

microorganisms and

enzymes through the fermentation of sugars or

starches (easiest), or cellulose (which is more

difficult).

Biobutanol (also called biogasoline) is often

claimed to provide a direct replacement for

gasoline, because it can be used directly in a

gasoline engine (in a similar way to biodiesel in

diesel engines).

Butanol is formed by

ABE fermentation (acetone, butanol, ethanol) and

experimental modifications of the process show

potentially high net energy gains with butanol as

the only liquid product. Butanol will produce more

energy and allegedly can be burned "straight" in

existing gasoline engines (without modification to

the engine or car), and is less corrosive and less

water soluble than ethanol, and could be distributed

via existing infrastructures.

DuPont and

BP are working together to help develop Butanol.

Ethanol fuel is the most common biofuel

worldwide, particularly

in Brazil.

Alcohol fuels are produced by fermentation of

sugars derived from

wheat,

corn,

sugar beets,

sugar cane,

molasses and any sugar or starch that

alcoholic beverages can be made from (like

potato and

fruit waste, etc.). The

ethanol production methods used are

enzyme digestion (to release sugars from stored

starches),

fermentation of the sugars,

distillation and

drying. The distillation process requires

significant energy input for heat (often

unsustainable

natural gas

fossil fuel, but cellulosic biomass such as

bagasse, the waste left after sugar cane is

pressed to extract its juice, can also be used more

sustainably).

Ethanol can be used in petrol engines as a

replacement for

gasoline; it can be mixed with gasoline to any

percentage. Most existing automobile petrol engines

can run on blends of up to 15% bioethanol with

petroleum/gasoline. Gasoline with ethanol added has

higher

octane, which means that the engine can

typically burn hotter and more efficiently. In high

altitude (thin air) locations, some states mandate a

mix of gasoline and ethanol as a winter

oxygenate to reduce atmospheric pollution

emissions.

Ethanol fuel has less

BTU energy content, which means it takes more

fuel (volume and mass) to go the same distance.

More-expensive premium fuels contain less, or no,

ethanol. In high-compression engines, lower-ethanol,

slower-burning premium fuel is required to avoid

harmful

pre-ignition (knocking). Very-expensive aviation

gasoline (Avgas) is 100 octane made from 100%

petroleum. The high price of zero-ethanol Avgas does

not include federal-and-state road-use taxes.

Ethanol is very

corrosive to fuel systems, rubber

hoses-and-gaskets,

aluminum, and

combustion chambers. It is therefore illegal to

use fuels containing alcohol in aircraft (although

at least one model of ethanol-powered aircraft has

been developed, the

Embraer EMB 202 Ipanema). Ethanol is

incompatible with marine fiberglass fuel tanks (it

makes them leak). For higher ethanol percentage

blends, and 100% ethanol vehicles, engine

modifications are required.

Corrosive ethanol cannot be transported in

petroleum pipelines, so more-expensive over-the-road

stainless-steel tank trucks increase the cost and

energy consumption required to deliver ethanol to

the customer at the pump.

In the current alcohol-from-corn production

model in the United States, considering the total

energy consumed by

farm equipment, cultivation, planting,

fertilizers,

pesticides,

herbicides, and

fungicides made from petroleum,

irrigation systems, harvesting, transport of

feedstock to processing plants,

fermentation,

distillation, drying, transport to fuel

terminals and retail pumps, and lower

ethanol fuel energy content, the net energy

content value added and delivered to consumers is

very small. And, the net benefit (all things

considered) does little to reduce un-sustainable

imported oil and fossil fuels required to produce

the ethanol.

Many car manufacturers are now producing

flexible-fuel vehicles (FFVs), which can safely

run on any combination of bioethanol and petrol, up

to 100% bioethanol. They dynamically sense exhaust

oxygen content, and adjust the engine's computer

systems, spark, and fuel injection accordingly. This

adds initial cost and ongoing increased vehicle

maintenance. Efficiency falls and pollution

emissions increase when FFV system maintenance is

needed (regardless of the 0%-to-100% ethanol mix

being used), but not performed (as with all

vehicles). FFV

internal combustion engines are becoming

increasingly complex, as are multiple-propulsion-system

FFV

hybrid vehicles, which impacts cost,

maintenance,

reliability, and useful lifetime.

Alcohol mixes with both petroleum and with

water, so

ethanol fuels are often diluted after the drying

process by absorbing environmental moisture from the

atmosphere. Water in alcohol-mix fuels reduces

efficiency, makes engines harder to start, causes

intermittent operation (sputtering), and oxidizes

aluminum (carburetors)

and steel components (rust).

Even dry ethanol has roughly one-third lower

energy content per unit of volume compared to

gasoline, so larger / heavier fuel tanks are

required to travel the same distance, or more fuel

stops are required. Even with large current unsustainable,

non-scalable

subsidies,

ethanol fuel still costs much more per unit of

distance traveled than current high gasoline prices

in the United States.
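
The effect of ethanol's lower energy content on blended fuels can be sketched numerically. The example below is a rough illustration, not a measured result: it assumes ethanol carries two-thirds of gasoline's energy per unit volume (the "roughly one-third lower" figure from the text), that blend energy scales linearly with volume fraction, and that engine efficiency is unchanged. The function names are hypothetical.

```python
# Assumption taken from the text: ethanol has roughly one-third less energy
# per unit volume than gasoline. Real values vary with fuel grade.
ETHANOL_RELATIVE_ENERGY = 2.0 / 3.0


def blend_relative_energy(ethanol_fraction: float,
                          ethanol_rel: float = ETHANOL_RELATIVE_ENERGY) -> float:
    """Energy per unit volume of a blend, relative to pure gasoline (= 1.0).

    Assumes simple volume-weighted mixing.
    """
    if not 0.0 <= ethanol_fraction <= 1.0:
        raise ValueError("ethanol_fraction must be between 0 and 1")
    return ethanol_fraction * ethanol_rel + (1.0 - ethanol_fraction)


def extra_volume_needed(ethanol_fraction: float,
                        ethanol_rel: float = ETHANOL_RELATIVE_ENERGY) -> float:
    """Fractional increase in fuel volume needed to travel the same distance."""
    return 1.0 / blend_relative_energy(ethanol_fraction, ethanol_rel) - 1.0


if __name__ == "__main__":
    for name, fraction in [("E10", 0.10), ("E85", 0.85), ("E100", 1.00)]:
        rel = blend_relative_energy(fraction)
        extra = extra_volume_needed(fraction)
        print(f"{name}: {rel:.3f} of gasoline energy, {extra:.1%} more volume")
```

Under these assumptions a low blend such as E10 costs only a few percent in range, while pure ethanol needs about half again as much fuel by volume.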

Methanol is currently produced from

natural gas, a non-renewable

fossil fuel. It can also be produced from

biomass as biomethanol. The

methanol economy is an interesting alternative

to the

hydrogen economy, compared to today's hydrogen

produced from

natural gas, but not

hydrogen production directly from water and

state-of-the-art clean

solar thermal energy processes.

Biogas

Biogas is produced by the process of

anaerobic digestion of

organic material by

anaerobes. It can be produced either from

biodegradable waste materials or by the use of

energy crops fed into

anaerobic digesters to supplement gas yields.

The solid byproduct,

digestate, can be used as a biofuel or a

fertilizer. In the UK, the National Coal Board

experimented with microorganisms that digested coal

in situ converting it directly to gases such as

methane.

Biogas contains

methane and can be recovered from industrial

anaerobic digesters and

mechanical biological treatment systems.

Landfill gas is a less clean form of biogas which is

produced in

landfills through naturally occurring anaerobic

digestion. If it escapes into the atmosphere it is a

potent

greenhouse gas.

Oils and gases can be produced from various

biological wastes:

- Thermal depolymerization of waste can extract

methane and other oils similar to petroleum.

- GreenFuel Technologies Corporation developed a

patented bioreactor system that uses nontoxic

photosynthetic algae to take in smokestack flue

gases and produce biofuels such as biodiesel,

biogas and a dry fuel comparable to coal.

Solid biofuels

Examples include wood, grass cuttings,

domestic refuse, charcoal, and dried

manure.

Syngas

Syngas is produced by the combined processes of

pyrolysis, combustion, and

gasification. Biofuel is converted into

carbon monoxide and energy by pyrolysis. A

limited supply of oxygen is introduced to support

combustion. Gasification converts further organic

material to hydrogen and additional carbon monoxide.

The resulting gas mixture, syngas, is itself a

fuel. Using the syngas is more efficient than direct

combustion of the original biofuel; more of the

energy contained in the fuel is extracted.

Syngas may be burned directly in internal

combustion engines. The

wood gas generator is a wood-fueled gasification

reactor mounted on an internal combustion engine.

Syngas can be used to produce

methanol and

hydrogen, or converted via the

Fischer-Tropsch process to produce a synthetic

petroleum substitute. Gasification normally

relies on temperatures >700°C. Lower temperature

gasification is desirable when co-producing

biochar.

Second generation biofuels

Supporters of biofuels claim that a more

viable solution is to increase political and

industrial support for, and rapidity of,

second-generation biofuel implementation from

non food crops, including

cellulosic biofuels.[18]

Second-generation biofuel production processes can

use a variety of

non food crops. These include waste biomass, the

stalks of wheat, corn, wood, and

special-energy-or-biomass crops (e.g.

Miscanthus). Second generation (2G) biofuels use

biomass to liquid technology, including

cellulosic biofuels from

non food crops.[19]

Many second generation biofuels are under

development such as

biohydrogen,

biomethanol,

DMF, Bio-DME,

Fischer-Tropsch diesel, biohydrogen diesel,

mixed alcohols and wood diesel.

Cellulosic ethanol production uses

non food crops or inedible waste products and

does not divert food away from the animal or human

food chain.

Lignocellulose is the "woody" structural

material of plants. This feedstock is abundant and

diverse, and in some cases (like citrus peels or

sawdust) it is a significant disposal problem.

Producing

ethanol from

cellulose is a difficult technical problem to

solve. In nature,

ruminant livestock (like

cattle) eat grass and then use slow enzymatic

digestive processes to break it into

glucose (sugar). In

cellulosic ethanol laboratories, various

experimental processes are being developed to do

the same thing, and then the sugars released can be

fermented to make ethanol fuel.

Scientists also work on experimental

recombinant DNA

genetic engineering organisms that could

increase biofuel potential.

Third generation biofuels

Algae fuel, also called oilgae or third

generation biofuel, is a biofuel from

algae. Algae are low-input, high-yield feedstocks

for biofuel production (30 times more energy per acre than land

crops), and algae fuels are

biodegradable.

One advantage of many biofuels over most

other fuel types is that they are

biodegradable, and so relatively harmless to

the environment if spilled.

Second and third generation biofuels are also

called advanced biofuels.

An emerging fourth

generation is based on the conversion of

vegetable oil and

biodiesel into gasoline.

Fourth generation biofuels

Craig Venter's company

Synthetic Genomics is genetically engineering

microorganisms to produce fuel directly from

carbon dioxide on an industrial scale.

Biofuels by country

Recognizing the importance of implementing

bioenergy, there are international organizations

such as IEA Bioenergy,

established in 1978 by the

OECD

International Energy Agency (IEA), with the aim

of improving cooperation and information exchange

between countries that have national programs in

bioenergy research, development and deployment. The

U.N. International Biofuels Forum is formed by

Brazil,

China,

India,

South Africa, the

United States and the

European Commission. The world leaders in

biofuel development and use are Brazil, United

States, France, Sweden and Germany.

Israel

IC Green Energy, a subsidiary of Israel Corp.,

aims by 2012 to process 4-5% of the global biofuel

market (~4 million tons). It is focused solely on

non-edible feedstock such as Jatropha, Castor,

cellulosic biomass and algae. In June 2008, Tel

Aviv-based Seambiotic and Seattle-based Inventure

Chemical announced a joint venture to use CO2

emissions-fed algae to make ethanol and biodiesel at

a biofuel plant in Israel.

China

In

China, the government is making E10 blends

mandatory in five provinces that account for 16% of

the nation's passenger cars. In Southeast Asia,

Thailand has mandated an ambitious 10% ethanol

mix in gasoline starting in 2007. For similar

reasons, the palm oil industry plans to supply an

increasing portion of national diesel fuel

requirements in

Malaysia and

Indonesia. In

Canada, the government aims for 45% of the

country’s gasoline consumption to contain 10%

ethanol by 2010.

India

In India, a bioethanol program calls for E5

blends throughout most of the country, with plans to

raise this requirement to E10 and then E20.

Europe

The

European Union in its

biofuels directive (updated 2006) has set the

goal that by 2010 each member state should

achieve at least 5.75% biofuel usage of all used

traffic fuel. By 2020 the figure should be 10%. As

of January 2008 these aims are being reconsidered in

light of certain environmental and social concerns

associated with biofuels such as rising food prices

and deforestation.

France

France was the second largest biofuel consumer

among the EU States in 2006. According to the

Ministry of Industry, France's consumption increased

by 62.7% to reach 682,000

toe (i.e. 1.6% of French fuel consumption).

Biodiesel represents the largest share of this (78%,

far ahead of bioethanol with 22%). The

unquestionable biodiesel leader in Europe is the

French company

Diester Industrie. In bioethanol, the French

agro-industrial group Téréos is increasing its

production capacities. Germany itself remained the

largest European biofuel consumer, with a

consumption estimate of 2.8 million tons of

biodiesel (equivalent to 2,408,000 toe), 0.71

million tons of vegetable oil (628,492 toe) and 0.48

million tons of bioethanol (307,200 toe).

Germany

The biggest German biodiesel company is

ADM Ölmühle Hamburg AG, which is a subsidiary of

the American group

Archer Daniels Midland Company. Other

large German producers include

MUW (Mitteldeutsche Umesterungswerke GmbH & Co

KG) and EOP Biodiesel AG. A major contender in terms

of bioethanol production is the German sugar

corporation,

Südzucker.

Spain

The Spanish group

Abengoa, via its American subsidiary Abengoa

Bioenergy, is the European leader in production of

bioethanol.

Sweden

The Swedish government, together with BIL

Sweden, the national association for the automobile

industry, has started

work to end oil dependency. One-fifth of cars in

Stockholm can run on alternative fuels, mostly

ethanol fuel. Also

Stockholm will introduce a fleet of Swedish-made

hybrid ethanol-electric buses. In 2005,

a plan to phase out oil in Sweden by 2020 was announced.

United Kingdom

In the

United Kingdom the

Renewable Transport Fuel Obligation (RTFO)

(announced 2005) is the requirement that by 2010 5%

of all road vehicle fuel is renewable. In 2008 a

critical report by the

Royal Society stated that biofuels risk

failing to deliver significant reductions in

greenhouse gas emissions from transport and could

even be environmentally damaging unless the

Government puts the right policies in place.

Brazil

In Brazil, the government hopes to build on

the success of the Proálcool ethanol program by

expanding the production of biodiesel; diesel fuel must

contain 2% biodiesel by 2008, increasing to 5% by

2013.

Colombia

Colombia mandates the use of 10% ethanol in all

gasoline sold in cities with populations exceeding

500,000. In

Venezuela, the state oil company is supporting

the construction of 15 sugar cane distilleries over

the next five years, as the government introduces an

E10 (10% ethanol) blending mandate.

USA

In 2006, the

United States president

George W. Bush said in a

State of the Union speech that the US is

"addicted to oil" and should replace 75% of imported

oil by 2025 by alternative sources of energy

including biofuels.

Essentially all of the

ethanol fuel in the US is produced from

corn. Corn is a very energy intensive crop,

which requires one unit of fossil-fuel energy to

create just 0.9 to 1.3 energy units of ethanol.[36]
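
What an energy ratio of 0.9 to 1.3 means in practice can be illustrated with a short calculation. This is a sketch under the figures quoted in the text only; the function names are hypothetical, and real life-cycle studies account for co-products and other factors that are ignored here.

```python
def net_energy(energy_ratio: float, fossil_input: float = 1.0) -> float:
    """Net energy gained per `fossil_input` units of fossil energy invested.

    `energy_ratio` is units of ethanol energy produced per unit of fossil
    energy consumed (0.9 to 1.3 for corn ethanol, per the text).
    """
    return energy_ratio * fossil_input - fossil_input


def fossil_input_per_net_unit(energy_ratio: float) -> float:
    """Fossil energy that must be spent to gain one net unit of energy.

    Returns infinity when the ratio is 1 or below, since no net energy
    is gained in that case.
    """
    if energy_ratio <= 1.0:
        return float("inf")
    return 1.0 / (energy_ratio - 1.0)


if __name__ == "__main__":
    for ratio in (0.9, 1.0, 1.3):
        print(f"ratio {ratio}: net {net_energy(ratio):+.2f}, "
              f"fossil input per net unit {fossil_input_per_net_unit(ratio):.2f}")
```

At the low end of the quoted range the process consumes more fossil energy than it delivers; at the high end, more than three units of fossil energy are spent for each net unit gained.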

A senior member of the

House Energy and Commerce Committee Congressman

Fred Upton has introduced legislation to use at

least E10 fuel by 2012 in all cars in the USA.

The 2007-12-19 U.S.

Energy Independence and Security Act of 2007

requires American “fuel producers to use at least 36

billion gallons of biofuel in 2022. This is nearly a

fivefold increase over current levels.”[37]

This is causing a significant agricultural resource

shift away from food production to biofuels.

American food exports have decreased (increasing

grain prices worldwide), and US food imports have

increased significantly.

Most biofuels are not currently cost-effective

without significant subsidies. "America's ethanol

program is a product of government subsidies. There

are more than 200 different kinds, as well as a 54

cents-a-gallon tariff on imported ethanol. This

prices Brazilian ethanol out of an otherwise

competitive market. Brazil makes ethanol from

sugarcane rather than corn (maize), which has a

better

EROEI. Federal subsidies alone cost $7 billion a

year (equal to around $1.90 a gallon)."

General Motors is starting a project to

produce

E85 fuel from

cellulose ethanol for a projected cost of $1 a

gallon. This is optimistic, however, because $1/gal

equates to $10/MBTU, which is comparable to

woodchips at $7/MBTU or

cord wood at $6-$12/MBTU, and this does not

account for conversion losses and plant operating

and capital costs, which are significant. The raw

materials can be as simple as corn stalks and scrap

petroleum-based vehicle tires, but used tires are an

expensive feedstock with other more-valuable uses.
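The unit conversion behind the $1/gal versus $10/MBTU comparison can be sketched as follows. This is a minimal illustration rather than a cost model: the function name `cost_per_mbtu` is hypothetical, MBTU is taken to mean one million BTU, and the energy content of 100,000 BTU per gallon is simply the value implied by the figures in the text, not a measured property of the fuel.

```python
def cost_per_mbtu(price_per_gallon: float, btu_per_gallon: float) -> float:
    """Convert a fuel price in $/gallon to $ per million BTU (MBTU)."""
    if btu_per_gallon <= 0:
        raise ValueError("energy content must be positive")
    return price_per_gallon * 1_000_000 / btu_per_gallon


# ASSUMPTION: energy content implied by the text's $1/gal ~ $10/MBTU figure.
IMPLIED_BTU_PER_GALLON = 100_000

# Feedstock prices quoted in the text, in $/MBTU.
WOODCHIPS = 7.0
CORD_WOOD_RANGE = (6.0, 12.0)

fuel_cost = cost_per_mbtu(1.00, IMPLIED_BTU_PER_GALLON)

# What is left per MBTU to cover conversion losses, operations, and capital
# if the feedstock is woodchips at the quoted price.
margin_over_woodchips = fuel_cost - WOODCHIPS

print(f"fuel: ${fuel_cost:.2f}/MBTU, "
      f"margin over woodchips: ${margin_over_woodchips:.2f}/MBTU")
```

Under these assumptions the finished fuel would sell for little more than the raw feedstock costs per unit of energy, which is the reason the target is described as optimistic.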

GM has over 4 million E85 cars on the road now, and

by 2012 half of the production cars for the U.S.

will be capable of running on E85 fuel. However, by

2012 the supply of ethanol will not even be close to

supplying this much E85. Coskata Inc. is building

two new plants for the ethanol fuel. Theoretically,

the process is claimed to be five times more energy

efficient than corn-based ethanol; however, it is

still in development and has not been proven to be

cost effective in a free market.

Greenhouse gas emissions are reduced by

86% for cellulosic ethanol, compared with a 29% reduction for corn ethanol.

Biofuels in developing

countries

Biofuel industries are becoming established in

many developing countries. Many developing countries

have extensive biomass resources that are becoming

more valuable as demand for biomass and biofuels

increases. The approaches to biofuel development in

different parts of the world vary. Countries such

as India and China are developing both bioethanol

and biodiesel programs. India is extending

plantations of

jatropha, an oil-producing tree that is used in

biodiesel production. The Indian sugar ethanol

program sets a target of 5% bioethanol incorporation

into transport fuel. China is a major bioethanol

producer and aims to incorporate 15% bioethanol into

transport fuels by 2010. Costs of biofuel promotion

programs can be very high, though.

Amongst rural populations in developing

countries, biomass provides the majority of fuel for

heat and cooking. Wood, animal dung and crop

residues are commonly burned. Figures from the

International Energy Agency show that biomass energy

provides around 30% of the total primary energy

supply in developing countries; over 2 billion

people depend on biomass fuels as their primary

energy source.

The use of biomass fuels for cooking indoors

is a source of health problems and pollution. In its

World Energy Outlook 2006, the International Energy

Agency attributed 1.3 million deaths to the use of

biomass fuels with inadequate ventilation.

Proposed solutions include improved

stoves and alternative fuels. However, stoves are

easily damaged, and alternative fuels tend to be

expensive. Very low cost, fuel efficient, low

pollution biomass stove designs have existed since

1980 or earlier.[43]

Obstacles include a lack of education, poor distribution,

corruption, and very low levels of foreign aid.

People in

developing countries are often unable to afford

these solutions without assistance or financing such

as

microloans. Organizations such as

Intermediate Technology Development Group work

to make improved facilities for biofuel use and

better alternatives accessible to those who cannot

get them.

Current issues in

biofuel production and use

Biofuels are proposed as having such benefits

as: reduction of

greenhouse gas emissions, reduction of

fossil fuel use, increased national

energy security, increased

rural development and a sustainable fuel supply

for the future.

However, biofuel production is questioned from

a number of angles. The chairman of the

Intergovernmental Panel on Climate Change,

Rajendra Pachauri, notably observed in March

2008 that questions arise on the emissions

implications of that route, and that biofuel

production has clearly raised prices of corn, with

an overall implication for food security.

Biofuels are also seen as having limitations.

The feedstocks for biofuel production must be

replaced rapidly and biofuel production processes

must be designed and implemented so as to supply the

maximum amount of fuel at the cheapest cost, while

providing maximum environmental benefits. Broadly

speaking, first generation biofuel production

processes cannot supply us with more than a few

percent of our energy requirements sustainably. The

reasons for this are described below. Second

generation processes can supply us with more biofuel,

with better environmental gains. The major barrier

to the development of second generation biofuel

processes is their capital cost: establishing second

generation biodiesel plants has been estimated at

€500million.

Recently, debate about the

advantages and disadvantages of biofuels seems to have

reached an inflexion point. The March 27, 2008

TIME magazine cover features the subject under

the title "The Clean Energy Myth":

Politicians and Big Business are pushing

biofuels like corn-based ethanol as alternatives

to oil. All they’re really doing is driving up

world food prices, helping to destroy the Amazon

jungle, and making global warming worse.

In the June, 2008 issue of the journal

Conservation Biology, scientists argue that because

such large amounts of energy are required to grow

corn and convert it to ethanol, the net energy gain

of the resulting fuel is modest. Using a crop such

as switchgrass, common forage for cattle, would

require much less energy to produce the fuel, and

using algae would require even less. Changing

direction to biofuels based on switchgrass or algae

would require significant policy changes, since the

technologies to produce such fuels are not fully

developed.

Oil price moderation

The

International Energy Agency's World Energy

Outlook 2006 concludes that rising oil demand,

if left unchecked, would accentuate the consuming

countries' vulnerability to a severe supply

disruption and resulting price shock. The report

suggested that biofuels may one day offer a viable

alternative, but also that "the implications of the

use of biofuels for global security as well as for

economic, environmental, and public health need to

be further evaluated".

Economists disagree on the extent to which biofuel

production affects crude oil prices. According to

Francisco Blanch, a commodity strategist for

Merrill Lynch, crude oil would be trading 15 per

cent higher and gasoline would be as much as 25 per

cent more expensive, if it were not for biofuels.

Gordon Quaiattini, president of the Canadian

Renewable Fuels Association, argued that a healthy

supply of alternative energy sources will help to

combat gasoline price spikes. However, the Federal

Reserve Bank of Dallas concluded that "Biofuels are

too limited in scale and currently too costly to

make much difference to crude oil pricing."

Rising food prices —

the "food vs. fuel" debate

Main article:

food vs fuel

This topic is internationally controversial.

There are those, such as the National Corn Growers

Association, who say biofuel is not the main cause of

rising food prices. Some say the problem is a result of government

actions to support biofuels. Others say it is just

due to oil price increases. The impact of food price

increases is greatest on poorer countries. Some have

called for a freeze on biofuels. Some have called

for more funding of second generation biofuels, which

should compete less with food production.

In May 2008 Olivier de Schutter, the

United Nations food adviser, called for a halt

on biofuel investment. In an interview in

Le Monde he stated: "The ambitious goals for

biofuel production set by the United States and the

European Union are irresponsible. I am calling for a

freeze on all investment in this sector." 100

million people are currently at risk due to the food

price increases.

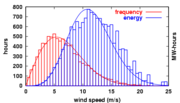

Carbon emissions

Graph of UK figures for the

carbon intensity of bioethanol and

fossil fuels. This graph assumes that all

bioethanols are burnt in their country of

origin and that previously existing cropland is

used to grow the feedstock.

Biofuels and other forms of

renewable energy aim to be

carbon neutral or even carbon negative. Carbon

neutral means that the carbon released during the

use of the fuel, e.g. through burning to power

transport or generate electricity, is reabsorbed and

balanced by the carbon absorbed by new plant growth.

These plants are then harvested to make the next

batch of fuel.

Carbon neutral fuels lead to no net increases in

human contributions to atmospheric

carbon dioxide levels, reducing the human

contributions to

global warming. A carbon negative aim is

achieved when a portion of the biomass is used for

carbon sequestration. Calculating exactly how

much

greenhouse gas (GHG) is produced in burning

biofuels is a complex and inexact process, which

depends very much on the method by which the fuel is

produced and other assumptions made in the

calculation.

Carbon emissions have been increasing ever

since the industrial revolution. Prior to the

industrial revolution, our atmosphere contained

about 280 parts per million of carbon dioxide. After

burning coal, gas, and oil to power our lives, the

concentration had risen to 315 parts per million.

Today, it is at the 380 level and still increasing

by approximately two parts per million annually.

During this time frame, the global average

temperature has risen by more than 1°F, because carbon

dioxide traps heat near the Earth’s surface.

Scientists believe that if the level goes beyond 450

parts per million, the temperature jump will be so

great that we will be faced with an enormous rise in

sea level due to the melting of Greenland and West

Antarctic ice sheets.
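The concentration figures above imply a rough timescale, which can be worked out with simple arithmetic. The sketch below assumes that the increase stays constant at the quoted two parts per million per year; that linear extrapolation is an assumption made only for illustration, and the function name `years_until` is hypothetical.

```python
def years_until(threshold_ppm: float, current_ppm: float,
                rise_ppm_per_year: float) -> float:
    """Years until a CO2 threshold is reached at a constant annual rise.

    ASSUMPTION: the annual rise stays constant (linear extrapolation).
    """
    if rise_ppm_per_year <= 0:
        raise ValueError("annual rise must be positive")
    remaining = threshold_ppm - current_ppm
    # A threshold that has already been passed is reported as zero years away.
    return max(0.0, remaining / rise_ppm_per_year)


# Figures quoted in the text: 380 ppm today, about 2 ppm per year, 450 ppm limit.
print(years_until(450, 380, 2))  # 35.0
```

At the quoted rate, the 450 parts per million level would be reached in about 35 years.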

The carbon emissions (Carbon

footprint) produced by biofuels are calculated

using a technique called

Life Cycle Analysis (LCA). This uses a "cradle

to grave" or "well to wheels" approach to calculate

the total amount of carbon dioxide and other

greenhouse gases emitted during biofuel production,

from putting seed in the ground to using the fuel in

cars and trucks. Many different LCAs have been done

for different biofuels, with widely differing

results. The majority of LCA studies show that

biofuels provide significant greenhouse gas

emissions savings when compared to fossil fuels such

as petroleum and diesel.[citation

needed] Therefore, using

biofuels to replace a proportion of the fossil fuels

that are burned for transportation can reduce

overall greenhouse gas emissions. The

well-to-wheel analysis for biofuels has shown

that first generation biofuels can save up to 60%

carbon emission and second generation biofuels can

save up to 80% as opposed to using fossil fuels.

However, these studies do not take into account

emissions from nitrogen fixation, deforestation,

land use, or any indirect emissions.

In October 2007, a study was published by

scientists from Britain, U.S., Germany and Austria,

including Professor

Paul Crutzen, who won a Nobel Prize for his work

on ozone. They reported that the burning of biofuels

derived from

rapeseed and

corn (maize) can contribute as much or more to

global warming through

nitrous oxide emissions than they offset through fossil

fuel savings. Nitrous oxide is both a potent

greenhouse gas and a destroyer of atmospheric ozone.

But they also reported that crops with lower

requirements for

nitrogen

fertilizers, such as grasses and woody

coppicing, will result in a net absorption of

greenhouse gases.

In February 2008, two articles were published

in

Science which investigated the GHG emissions

effects of the large amount of natural land that is

being converted to cropland globally to support

biofuels development. The first of these studies,

conducted at the

University of Minnesota, found that:

...converting rainforests, peatlands,

savannas, or grasslands to produce food-based

biofuels in Brazil, Southeast Asia, and the United

States creates a ‘biofuel carbon debt’ by

releasing 17 to 420 times more CO2 than

the annual greenhouse gas (GHG) reductions these

biofuels provide by displacing fossil fuels.

This study not only takes into account removal

of the original vegetation (as timber or by burning)

but also the biomass present in the soil, for

example roots, which is released on continued

plowing. It also pointed out that:

...biofuels made from waste biomass or from

biomass grown on degraded and abandoned

agricultural lands planted with perennials incur

little or no carbon debt and can offer immediate

and sustained GHG advantages.
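The "biofuel carbon debt" quoted above from the University of Minnesota study can be read as a payback time: if land conversion releases N times the annual greenhouse gas saving, it takes N years of fuel production to repay the debt. The sketch below illustrates that reading only. The function name is hypothetical, and it assumes the annual saving stays constant over the whole period.

```python
def carbon_debt_payback_years(carbon_debt: float, annual_saving: float) -> float:
    """Years of biofuel production needed to repay a land-conversion carbon debt.

    Both arguments must use the same unit (for example tonnes of CO2 per
    hectare). ASSUMPTION: the annual saving is constant over time.
    """
    if annual_saving <= 0:
        raise ValueError("annual saving must be positive; the debt is never repaid")
    return carbon_debt / annual_saving


# The study's range: the debt is 17 to 420 times the annual saving.
shortest = carbon_debt_payback_years(17, 1)
longest = carbon_debt_payback_years(420, 1)
print(f"payback between {shortest:.0f} and {longest:.0f} years")
```

By the same reading, feedstocks that incur little or no carbon debt have a payback time close to zero, which is the sense in which they offer "immediate" advantages.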

The second study, conducted at

Princeton University, used a worldwide

agricultural model to show that:

...corn-based ethanol, instead of producing

a 20% savings, nearly doubles greenhouse emissions

over 30 years and increases greenhouse gases for

167 years.

Both of the Science studies highlight the need

for

sustainable biofuels, using feedstocks that

minimize competition for prime croplands. These

include farm, forest and municipal waste streams;

energy crops grown on marginal lands; and algae.

These second generation biofuels feedstocks "are

expected to dramatically reduce GHGs compared to

first generation biofuels such as corn ethanol". In

short, biofuels done unsustainably could make the

climate problem worse, while biofuels done

sustainably could play a leading role in solving the

carbon challenge.

Sustainable biofuel

production

Responsible policies and economic instruments

would help to ensure that biofuel commercialization,

including the development of new

cellulosic technologies, is

sustainable. Sustainable biofuel production

practices would not hamper food and fibre

production, nor cause water or environmental

problems, and would actually enhance soil

fertility. Responsible commercialization of

biofuels represents an opportunity to enhance

sustainable economic prospects in Africa, Latin

America and impoverished Asia.

Soil erosion,

deforestation, and biodiversity

It is important to note that

carbon compounds in waste

biomass that is left on the ground are consumed

by other

microorganisms. They break down

biomass in the soil to produce valuable

nutrients that are necessary for future crops.

On a larger scale, plant biomass waste provides

small wildlife

habitat, which in turn ripples up through the

food chain. The widespread human use of biomass

(which would normally

compost the field) would threaten these

organisms and natural habitats. When

cellulosic ethanol is produced from feedstock

like

switchgrass and

saw grass, the nutrients that were required to

grow the

lignocellulose are removed and cannot be

processed by microorganisms to replenish the soil

nutrients. The soil is then of poorer quality. Loss

of ground cover root structures accelerates

unsustainable

soil erosion.

Significant areas of native

Amazon rainforest have been cleared by

slash and burn techniques to make room for

sugar cane production, which is used in large

part for

ethanol fuel in Brazil, and growing

ethanol exports. Large-scale

deforestation of mature trees (which help remove

CO2 through

photosynthesis much better than

sugar cane or most other biofuel feedstock crops

do) contributes to

global warming through unsustainable atmospheric

greenhouse gas levels, loss of

habitat, and a reduction of valuable

biodiversity. Demand for biofuel has led to

clearing land for

Palm Oil plantations.

A portion of the biomass should be retained

onsite to support the soil resource. Normally this

will be in the form of raw biomass, but processed

biomass is also an option. If the exported biomass

is used to produce

syngas, the process can be used to co-produce

biochar, a low-temperature charcoal used as a

soil amendment to increase soil organic matter to a

degree not practical with less recalcitrant forms of

organic carbon. For co-production of biochar to be

widely adopted, the soil amendment and carbon

sequestration value of co-produced charcoal must

exceed its net value as a source of energy.

Impact on water

resources

Increased use of biofuels puts increasing

pressure on water resources in at least two ways:

water use for the irrigation of crops used as

feedstocks for biodiesel production; and water use

in the production of biofuels in refineries, mostly

for boiling and cooling.

In many parts of the world supplemental or

full irrigation is needed to grow feedstocks. For

example, if in the production of corn (maize) half

the water needs of crops are met through irrigation

and the other half through rainfall, about 860

liters of water are needed to produce one liter of

ethanol.

In the United States, the number of ethanol

factories has almost tripled from 50 in 2000 to

about 140 in 2008. A further 60 or so are under

construction, and many more are planned. Projects

are being challenged by residents in courts in

Missouri (where water is drawn from the

Ozark Aquifer), Iowa, Nebraska, Kansas (all of

which draw water from the non-renewable

Ogallala Aquifer), central Illinois (where water

is drawn from the

Mahomet Aquifer) and Minnesota.

Aldehydes

Formaldehyde,

Acetaldehyde and other

Aldehydes are produced when alcohols are

oxidized. When only a 10% mixture of ethanol is

added to gasoline (as is common in American

E10

gasohol and elsewhere), aldehyde emissions

increase 40%. Some study results conflict on

this point, however, and lowering the sulfur content

of biofuel mixes lowers the acetaldehyde levels.

Burning biodiesel also emits aldehydes and other

potentially hazardous aromatic compounds which are

not regulated in emissions laws.

Many aldehydes are toxic to living cells.

Formaldehyde irreversibly cross-links

protein

amino acids, which produces the hard flesh of

embalmed bodies. At high concentrations in an

enclosed space, formaldehyde can be a significant

respiratory irritant causing nose bleeds,

respiratory distress, lung disease, and persistent

headaches. Acetaldehyde, which is produced in the

body by alcohol drinkers and found in the mouths of

smokers and those with poor oral hygiene, is

carcinogenic and

mutagenic.

The

European Union has banned products that contain

Formaldehyde, due to its documented

carcinogenic characteristics. The U.S.

Environmental Protection Agency has labeled

Formaldehyde as a probable cause of cancer in

humans.

Brazil burns significant amounts of ethanol

biofuel. Gas

chromatograph studies were performed of ambient

air in São Paulo, Brazil, and compared to Osaka,

Japan, which does not burn ethanol fuel. Atmospheric

Formaldehyde was 160% higher in Brazil, and

Acetaldehyde was 260% higher.

Social and Water

impact in Indonesia

In some locations such as Indonesia

deforestation for Palm Oil plantations is leading to

displacement of Indigenous peoples. Also, extensive

use of pesticide for biofuel crops is reducing clean

water supplies.

Environmental

organizations' stance

Some mainstream environmental groups support

biofuels as a significant step toward slowing or

stopping global climate change.[citation

needed] However, biofuel

production can threaten the environment if it is not

done sustainably. This finding has been backed by

reports of the

UN, the

IPCC, and some other smaller environmental and

social groups such as the

EEB and

the Bank Sarasin, which generally remain negative

about biofuels.

As a result, governmental and environmental

organisations are turning against biofuels made in a

non-sustainable way (thereby preferring certain

sources such as

jatropha and

lignocellulose over

palm oil) and are asking for global support for

this. Also, besides supporting these more

sustainable biofuels, environmental organisations

are turning to new technologies that do not use

internal combustion engines, such as

hydrogen and compressed air.

The "Roundtable on Sustainable Biofuels" is an

international initiative which brings together

farmers, companies, governments, non-governmental

organizations, and scientists who are interested in

the sustainability of biofuels production and

distribution. During 2008, the Roundtable is

developing a series of principles and criteria for

sustainable biofuels production through

meetings, teleconferences, and online discussions.

The increased manufacture of biofuels will

require increasing land areas to be used for

agriculture. Second and third generation biofuel

processes can ease the pressure on land, because

they can use waste biomass, and existing (untapped)

sources of biomass such as crop residues and

potentially even marine algae.

In some regions of the world, a combination of

increasing demand for food, and increasing demand

for biofuel, is causing deforestation and threats to

biodiversity. The best reported example of this is

the expansion of oil palm plantations in Malaysia

and Indonesia, where rainforest is being destroyed

to establish new oil palm plantations. Notably,

90% of the palm oil produced in

Malaysia is used by the food industry; therefore

biofuels cannot be held solely responsible for this

deforestation. There is a pressing need for

sustainable palm oil production for the food and

fuel industries; palm oil is used in a wide variety

of food products. The Roundtable on Sustainable

Biofuels is working to define criteria,

standards and processes to promote sustainably

produced biofuels.

Palm oil is also used in the manufacture

of detergents, and in electricity and heat

generation both in Asia and around the world (the UK

burns palm oil in coal-fired power stations to

generate electricity).

Significant area is likely to be dedicated to

sugar cane in future years as demand for ethanol

increases worldwide. The expansion of sugar cane

plantations will place pressure on

environmentally-sensitive native ecosystems

including rainforest in South America. In forest

ecosystems, these effects themselves will undermine

the climate benefits of alternative fuels, in

addition to representing a major threat to global

biodiversity.

Although biofuels are generally considered to

improve net carbon output, biodiesel and other fuels

do produce local air pollution, including

nitrogen oxides, the principal cause of

smog.

Potential for poverty

reduction

Researchers at the

Overseas Development Institute have argued that

biofuels could help to reduce poverty in the

developing world, through increased

employment, wider

economic growth multipliers and energy price

effects. However, this potential is described as

'fragile', and is reduced where feedstock production

tends to be large scale, or causes pressure on

limited agricultural resources: capital investment,

land, water, and the net cost of food for the poor.

With regards to the potential for poverty

reduction or exacerbation, biofuels rely on many of

the same policy, regulatory or investment

shortcomings that impede

agriculture as a route to

poverty reduction. Since many of these

shortcomings require policy improvements at a

country level rather than a global one, they argue

for a country-by-country analysis of the potential

poverty impacts of biofuels. This would consider,

among other things, land administration systems,

market coordination and prioritising investment in

biodiesel, as this 'generates more labour, has

lower transportation costs and uses simpler

technology'.

Biofuel prices

At retail pump prices, including U.S.

subsidies and Federal and state

motor taxes, low-level

biodiesel blends (B2-B5) are cheaper than

petroleum diesel by about 12 cents, and B20

blends cost the same per unit of volume as

petrodiesel.

Due to the 1/3 lower energy content of

ethanol fuel, even the heavily-subsidized net

cost to drive a specific distance in

flexible-fuel vehicles is higher than with gasoline at

current prices.
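The effect of the lower energy content on driving cost can be illustrated with a short calculation. This is a sketch under stated assumptions: distance travelled per unit of fuel is taken to scale directly with energy content, the one-third shortfall is the figure quoted in the text, and the prices and fuel economy in the example are hypothetical values chosen only to show the arithmetic.

```python
def breakeven_ethanol_price(gasoline_price: float,
                            energy_shortfall: float = 1 / 3) -> float:
    """Ethanol price at which cost per distance equals that of gasoline.

    ASSUMPTION: distance per unit volume scales with energy content, so a
    fuel with one third less energy must cost one third less per volume.
    """
    if not 0 <= energy_shortfall < 1:
        raise ValueError("energy shortfall must be in [0, 1)")
    return gasoline_price * (1 - energy_shortfall)


def cost_per_distance(price_per_volume: float, distance_per_volume: float) -> float:
    """Fuel cost for one unit of distance."""
    return price_per_volume / distance_per_volume


# Hypothetical example: gasoline at $3.00/gal in a car that gets 30 miles/gal.
gasoline_price, gasoline_mpg = 3.00, 30.0
ethanol_mpg = gasoline_mpg * (1 - 1 / 3)   # same car on ethanol fuel
ethanol_price = 2.50                       # hypothetical pump price

print(f"break-even ethanol price: ${breakeven_ethanol_price(gasoline_price):.2f}/gal")
print(f"gasoline: ${cost_per_distance(gasoline_price, gasoline_mpg):.3f}/mile")
print(f"ethanol:  ${cost_per_distance(ethanol_price, ethanol_mpg):.3f}/mile")
```

In this example the ethanol fuel is cheaper per gallon but more expensive per mile, because its pump price is above the break-even level.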

Energy efficiency and

energy balance of biofuels

Production of biofuels from raw materials

requires

energy (for farming, transport and conversion to

final product, and the production / application of

fertilizers,

pesticides,

herbicides, and

fungicides), and has environmental consequences.

The

energy balance of a biofuel is determined by the

amount of energy put into the manufacture of fuel

compared to the amount of energy released when it is

burned in a vehicle. This varies by feedstock and

according to the assumptions used. Biodiesel made

from sunflowers may yield only 0.46 times the

fuel energy put in. Biodiesel made from

soybeans may yield 3.2 times the fossil fuel

energy put in. This compares to 0.805 for gasoline

and 0.843 for diesel made from petroleum. Biofuels

may require higher energy input per unit of

BTU energy content produced than

fossil fuels: petroleum can be pumped out of the

ground and processed more efficiently than biofuels

can be grown and processed. However, this is not

necessarily a reason to use oil instead of biofuels,

nor does it have an impact on the environmental

benefits provided by a given biofuel.
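The energy balance figures above can be compared directly by treating each as a ratio of energy out to energy in. The sketch below uses the four ratios exactly as quoted; the helper names are hypothetical, and, as the text notes, the underlying studies differ in what they count as input, so the ranking is only as reliable as those assumptions.

```python
# Energy-balance ratios quoted in the text: energy out per unit of energy in.
ENERGY_RATIOS = {
    "sunflower biodiesel": 0.46,
    "soybean biodiesel": 3.2,
    "gasoline": 0.805,
    "petroleum diesel": 0.843,
}


def net_energy(ratio: float, input_units: float = 1.0) -> float:
    """Energy gained (positive) or lost (negative) for a given energy input."""
    return input_units * (ratio - 1.0)


def rank_by_ratio(ratios: dict) -> list:
    """Fuel names sorted from the highest energy ratio to the lowest."""
    return sorted(ratios, key=ratios.get, reverse=True)


for fuel in rank_by_ratio(ENERGY_RATIOS):
    ratio = ENERGY_RATIOS[fuel]
    print(f"{fuel}: ratio {ratio}, net {net_energy(ratio):+.3f} per unit of input")
```

A ratio above 1 means the fuel returns more energy than was put in; of the four quoted figures, only soybean biodiesel meets that test.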

Studies have been done that calculate energy

balances for biofuel production. Some of these show

large differences depending on the biomass feedstock

used and location.

To give one specific example, a

June 17,

2006 editorial in the Wall Street Journal stated,

"The most widely cited research on this subject

comes from Cornell's David Pimentel and Berkeley's

Ted Patzek. They've found that it takes more than a

gallon of fossil fuel to make one gallon of ethanol

— 29% more. That's because it takes enormous amounts

of fossil-fuel energy to grow corn (using fertilizer

and irrigation), to transport the crops and then to

turn that corn into ethanol."

Life cycle assessments of biofuel production

show that under certain circumstances, biofuels

produce only limited savings in energy and

greenhouse gas emissions. Fertiliser inputs and

transportation of biomass across large distances can

reduce the GHG savings achieved. The location of

biofuel processing plants can be planned to minimize

the need for transport, and agricultural regimes can

be developed to limit the amount of fertiliser used

for biomass production. A European study on the

greenhouse gas emissions found that

well-to-wheel (WTW) CO2 emissions of

biodiesel from seed crops such as

rapeseed could be almost as high as fossil

diesel. It showed a similar result for bio-ethanol

from starch crops, which could have almost as many

WTW CO2 emissions as fossil petrol. This

study showed that second generation biofuels have

far lower WTW CO2 emissions.

Other independent LCA studies show that

biofuels save around 50% of the CO2

emissions of the equivalent fossil fuels. This can

be increased to 80-90% GHG emissions savings if

second generation processes or reduced fertiliser

growing regimes are used. Further GHG savings can be

achieved by using by-products to provide heat, such

as using

bagasse to power ethanol production from

sugarcane.

Collocation of

synergistic processing plants can enhance

efficiency. One example is to use the exhaust heat

from an industrial process for ethanol production,

which can then

recycle cooler processing water, instead of

evaporating hot water that warms the atmosphere.

Biofuels and solar

energy efficiency

Biofuels from plant materials convert energy

that was originally captured from

solar energy via

photosynthesis. A comparison of conversion

efficiency from solar to usable energy (taking into

account the whole energy budgets) shows that

photovoltaics are 100 times more efficient than

corn ethanol[104]

and 10 times more efficient than the best biofuel.

Centralised vs.

decentralised production

There is debate around the best model for

production.

One side sees centralised vegetable oil fuel

production offering

- efficiency

- greater potential for fuel standardisation

- ease of administering taxes

- possibility for rapid expansion

The other side of the argument points to

- increased fuel security

- rural job creation

- less of a 'monopolistic'

or 'oligopolistic'

market due to the increased number of producers

- benefits to local economy as a greater part

of any profits stay in the local economy

- decreased transportation and

greenhouse gases of feedstock and end product

- consumers close to and able to observe the

effects of production

The majority of established biofuel markets

have followed the centralised model with a few small

or micro producers holding a minor segment of the

market. A notable exception to this has been the

pure plant oil (PPO) market in Germany which grew

exponentially until the beginning of 2008 when

increasing feedstock prices and the introduction of

fuel duty combined to stifle the market. Fuel was

produced in hundreds of small oil mills distributed

throughout Germany often run as part of farm

businesses.

Initially, fuel quality could be variable, but

as the market matured, new technologies were

developed that made significant improvements. As

the technologies surrounding this fuel improved,

usage and production rapidly increased, with rapeseed

oil PPO forming a significant segment of

transportation biofuels consumed in 2007.

See also

along

the border between Oregon and Washington, carries a

great deal of energy in its flow. So, too, with water

descending rapidly from a very high point, like Niagara

Falls in New York. In either instance, the water flows

through a pipe, or penstock, then pushes against

and turns blades in a turbine to spin a generator to

produce electricity. In a run-of-the-river system,

the force of the current applies the needed pressure,

while in a storage system, water is accumulated

in reservoirs created by dams, then released when the

demand for electricity is high. Meanwhile, the

reservoirs or lakes are used for boating and fishing,

and often the rivers beyond the dams provide

opportunities for whitewater rafting and kayaking.

The energy $E$ released by lowering an object of mass $m$

by a height $h$

in a gravitational field is

$$E = m g h,$$

where $g$

is the acceleration due to gravity. Substituting power $P$

for $E/t$ (energy per unit time $t$)

and expressing $m/t$

in terms of the volume of liquid moved per unit time

(the flow rate $Q$)

and the density of water $\rho$, we arrive at the usual

form of this expression:

$$P = \rho Q g h,$$

where $\rho$ is measured in kg/m³.
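The power available from falling water is commonly written as the product of water density, volume flow rate, gravitational acceleration and height. A minimal sketch of that calculation follows. The symbol and function names are assumptions, since the original notation is not recoverable here, and the result is the ideal figure before any turbine or generator losses.

```python
WATER_DENSITY_KG_M3 = 1000.0   # approximate density of fresh water
GRAVITY_M_S2 = 9.81            # standard acceleration due to gravity


def hydro_power_watts(flow_m3_per_s: float, head_m: float,
                      density: float = WATER_DENSITY_KG_M3,
                      g: float = GRAVITY_M_S2) -> float:
    """Ideal hydropower in watts: density * flow rate * g * height.

    Ignores turbine and generator losses, so real output is lower.
    """
    if flow_m3_per_s < 0 or head_m < 0:
        raise ValueError("flow and head must be non-negative")
    return density * flow_m3_per_s * g * head_m


# Hypothetical example: 10 cubic metres per second falling 20 metres.
power = hydro_power_watts(flow_m3_per_s=10.0, head_m=20.0)
print(f"{power / 1e6:.2f} MW")
```

Doubling either the flow or the height doubles the ideal power, which is why both high-volume rivers and high falls are attractive sites.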

Fast-growing cottonwoods

being harvested for paper and energy.

Fast-growing hardwoods, such

as hybrid poplars and sycamores, are efficient solar

collectors, gathering the sun's energy and converting it

to useful chemical energy. In four counties in southern Iowa the

harvest may begin far sooner. Plans are being made to

plant switchgrass on approximately 4,000 acres. The

project is attracting the interest of area power

companies, which could mix the switchgrass with coal to

reduce emissions and expand use of renewable energy.
